Yes, ChatGPT has changed the world. But is it a silver bullet that can solve every problem you throw at it? It all depends on your use case. Within customer support, that means figuring out how to best unlock this new technology’s potential for natural conversations and efficient processes behind the scenes.

What problem are you trying to solve with generative AI?

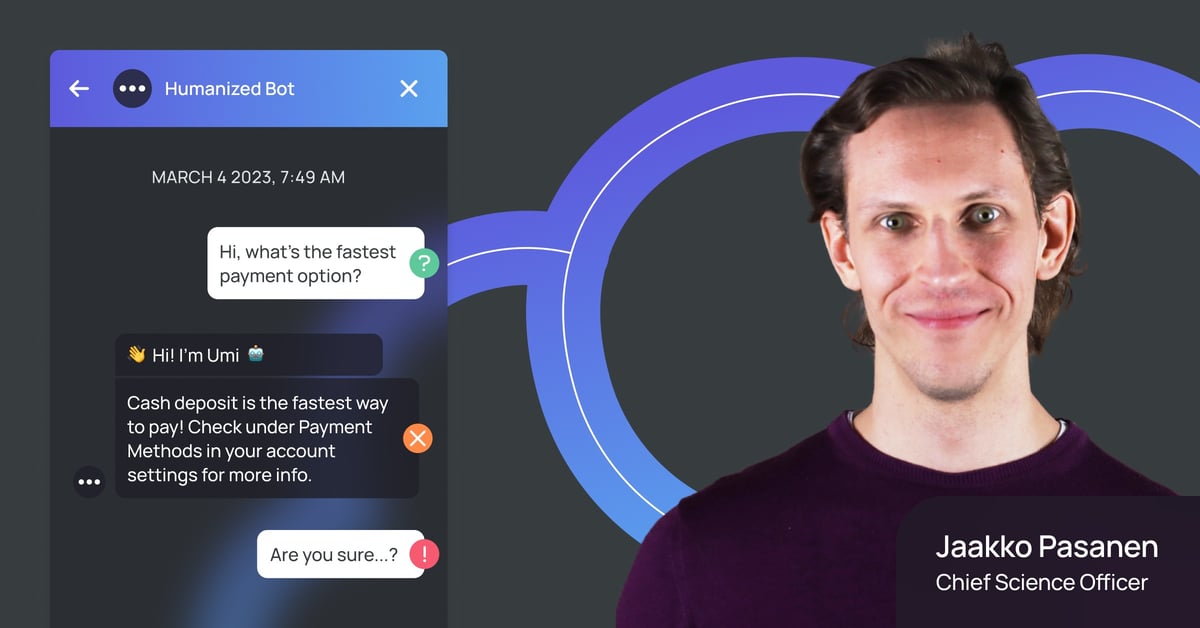

Our Chief Science Officer Jaakko Pasanen has been having déjà vu as of late. “I remember in the early days of Ultimate, people were saying, ‘You have AI’, and giving us all sorts of problems to solve. And I’m seeing something similar happening with LLMs today. People think that, just because you have an LLM, you can just give it a problem, any problem, and it’ll solve that problem.”

And he’s onto something: Like confetti on the world’s most glamorous dance floor, the initial hype around ChatGPT, Large Language Models (LLMs) and generative AI is slowly settling. Experts and investors are taking a look around – and growing nervous: Scores of start-ups have recently shot out of the ground that seem to vaguely work with “generative AI”, but many of them are fundamentally flawed in their approach: They’re focusing on a new, shiny tool. Not the problems that tool is best designed to solve.

We’re happy to say that’s not been the case here at Ultimate. Over the past few weeks, our team of in-house AI researchers and product developers have been testing the value that generative AI can add to customer support. They’ve identified use cases, overcome initial challenges, and emerged from the process with a bigger, better product suite in hand.

“The question you have to ask yourself is, what is your application designed to do? What problem are you looking for the LLM to solve? Different applications have different requirements and different risks.”

— Jaakko Pasanen, Chief Science Officer, Ultimate

In session 4 of our Humanized AI Series, our co-founders Reetu and Jaakko discuss the good, the bad, and the raw in-between that got us there.

Examples of LLMs and generative AI in customer support

In our previous session, we covered two types of use cases for LLMs and generative AI in customer support: creating more natural conversations with customers, and making behind-the-scenes processes even more efficient.

Customer-facing applications could use generative AI to:

- Add a conversational LLM layer on top of your underlying intent-based architecture to make chat conversations more natural and human-sounding

- Pull info from your pages, like your knowledge base, CRM Help Center, FAQ page or any other company page, to provide instant, up-to-date information to frequently asked questions

Behind-the-scenes processes could use generative AI and LLMs to:

- Structure, summarize, and auto-populate support tickets

- Transform factual replies to customer requests into a specific tone of voice

- Sort customer data into intents

- Craft example replies for conversation designers to use as is or as inspiration to brainstorm dialogue

As we’ve started testing these use cases in our product, we’ve seen two sets of challenges emerge: one based on the generative nature of LLMs, the other on the resource-intensive nature of hosting such large models.

Challenge #1: Generative AI chatbots making up facts

We’ve all seen – and chuckled at – ChatGPT’s ineptitude when it comes to delivering facts. Many of us may also have marvelled at its uncanny ability to cover up this weakness. But while that’s fine in a recreational context, it’s unacceptable in customer support.

LLMs start making up information when the data they’re trained on either doesn’t contain any information about the question asked, or contains information that’s irrelevant to the question.

In customer support, one example could be a customer asking for the “fastest payment option” for a purchase. If your knowledge base doesn’t contain any mention of the fastest payment option, you’d run the risk of your LLM-powered bot "hallucinating", or making up, a random answer to this question.

So how can you implement software like ChatGPT in your support (without it going off on random tangents about Elon Musk)? Here’s a demo showing how we’ve solved this issue for the use case of using LLMs to draw information from an FAQ-type knowledge base.

Solution: Keep your model focused with an LLM system

“Even though large language models have a lot of capabilities for hallucinating, they tend to behave very well when they have the relevant information available. So the #1 thing you need to do is make sure they’re receiving relevant information.”

— Jaakko Pasanen, Chief Science Officer, Ultimate

Making sure your LLM sticks to the task at hand (read: providing specific information to customers) is possible. But it requires optimizing the environment that you’re plugging that model into. In other words, you’ll want to create a functional LLM system. Here are the main ingredients you’ll need to make it work:

- Providing the right training data: The key with training data in customer support isn’t necessarily quantity, it’s quality. Make sure the data (aka your knowledge base) feeding your chatbot is relevant to the topics your customers care about.

- Grounding your model with a search engine: Additionally, you can steer the way your LLM navigates your knowledge base with an internal custom search engine that only lets the model access information that’s relevant to the questions asked.

- Fact-checking: After using your LLM to generate an answer, you can use a different model to verify if the answer given was correct.
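To make these ingredients a bit more concrete, here is a minimal sketch of how they could fit together. It is not our production setup: the knowledge base entries, the keyword-overlap "search engine", and the `generate` and `verify` callables (stand-ins for the answering model and the fact-checking model) are all assumptions made for illustration. The idea it shows is simply that the model only ever sees relevant passages, and that the bot declines to answer when there are none.

```python
import re

# Hypothetical knowledge base; the entries are illustrative only.
KNOWLEDGE_BASE = [
    "We accept credit cards, PayPal and bank transfers.",
    "Orders are shipped within two business days.",
    "You can return items within 30 days of delivery.",
]

FALLBACK = "Sorry, I don't have that information. Let me connect you to an agent."

STOPWORDS = {"the", "a", "an", "is", "are", "for", "what", "which", "do",
             "you", "i", "can", "we", "of", "to", "and", "your", "my", "how"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword tokens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOPWORDS}


def retrieve(question, articles, min_overlap=2):
    """Toy stand-in for a search engine: rank articles by shared keywords
    and keep only those that share at least `min_overlap` of them."""
    question_tokens = tokenize(question)
    scored = [(len(question_tokens & tokenize(a)), a) for a in articles]
    scored.sort(key=lambda pair: -pair[0])
    return [article for score, article in scored if score >= min_overlap]


def build_prompt(question, passages):
    """Ground the model by putting only the retrieved passages in the prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the customer using ONLY the context below. "
        "If the context does not contain the answer, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def answer(question, articles, generate, verify=None):
    """generate(prompt) -> str is the answering model (assumed).
    verify(draft, passages) -> bool is an optional second model (assumed)."""
    passages = retrieve(question, articles)
    if not passages:
        # Nothing relevant found: decline instead of letting the model guess.
        return FALLBACK
    draft = generate(build_prompt(question, passages))
    if verify is not None and not verify(draft, passages):
        # The fact-checking step rejected the draft.
        return FALLBACK
    return draft
```

With this toy knowledge base, a question about the "fastest payment option" finds no sufficiently relevant passage, so the bot falls back rather than inventing an answer. A real system would swap the keyword overlap for a proper search engine, but the control flow stays the same.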

“We need to think of AI in customer support as not just one neural network, but a whole brain, where different parts of the brain handle different tasks.”

— Jaakko Pasanen, Chief Science Officer, Ultimate

Challenge #2: Implementation and upkeep of LLMs can be resource-intensive

Hosting your own LLM

Their size is what has made LLM chatbots so groundbreakingly accurate and human-sounding. But it’s also what can make them hard to run and maintain.

“Large language models – especially the ones that create the biggest hype – they really are large. Very, very large,” says Jaakko. “And that can cause significant engineering challenges.”

For example, hosting a single LLM can run up costs in the tens of thousands. And many cloud providers might not be able to provide the immense amounts of storage space required for an LLM to run smoothly.

The larger the model, the greater the risk of issues like latency, meaning that the model takes a long time to process a request. This can be a problem in customer support, where customers expect replies that are not only natural-sounding and correct, but also instant.

What about APIs like OpenAI's?

Ever headed to the OpenAI website with a new idea for ChatGPT, only to find out it was down for the day?

Try having two demos with customers lined up. It’s what happened to our product team when we relied on OpenAI’s ChatGPT API to test out generative AI in our product suite.

“It’s a good example of the perils of relying on an open-source third-party API. If it goes down, and there are no service-level agreements (SLAs), there’s not much you can do,” says Reetu.

APIs can be a simpler alternative to hosting your own LLM, but the downside is that they may be less reliable.
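One common way to soften that reliability risk is to wrap the API call so that the bot degrades gracefully when the provider is down. The sketch below is an illustration under assumptions, not a description of our stack: `primary` and `fallback` are hypothetical callables (for example, a hosted LLM API and a canned intent-based reply), and the retry count and backoff are placeholder values.

```python
import time


def call_with_fallback(primary, fallback, prompt, retries=2, backoff_seconds=0.5):
    """Try the primary model a few times, then hand over to the fallback.

    primary(prompt) -> str and fallback(prompt) -> str are assumed callables.
    """
    for attempt in range(retries):
        try:
            return primary(prompt)
        except Exception:
            # Broad on purpose for this sketch; real code would catch the
            # specific timeout and connection errors of the client in use.
            time.sleep(backoff_seconds * (attempt + 1))
    # The primary never answered: use the simpler but dependable path.
    return fallback(prompt)
```

A wrapper like this does not replace an SLA, but it means an outage leads to a plainer reply rather than no reply at all.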

The cost of single API requests can also add up compared to hosting your own model. That’s why unlocking the potential of LLMs requires a careful calibration of technical reliability, scalability, and what makes the most sense financially.

Solution: "Reasonably-sized" language models

So if we remember to choose our tools based on our needs, and if large language models are so hard to implement, do we actually need LLMs in customer support?

“Especially when it comes to answering questions and natural language understanding, the models do scale. So we do see better performance with larger models,” says Jaakko.

But size is only one among several factors that make or break an AI model’s performance. As mentioned before, the data a model is trained with plays an almost equally important role, as does its overall infrastructure.

“With the correct training data, I think we can get very good results with a reasonably sized model. It doesn’t need hundreds of billions of parameters, but maybe tens of billions.”

— Jaakko Pasanen, Chief Science Officer, Ultimate
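To get a feel for why that difference matters for hosting, here is a rough back-of-the-envelope calculation. It assumes the weights are stored as 16-bit numbers (2 bytes per parameter) and ignores everything else a running model needs, so treat the results as a lower bound. The two parameter counts are example sizes picked for illustration, not figures for any particular model.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Memory needed just to hold the model weights, in gigabytes (10**9 bytes).

    Assumes 16-bit weights by default; excludes activations and caches.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Example sizes chosen for illustration only.
tens_of_billions = weight_memory_gb(13e9)        # 26 GB of weights
hundreds_of_billions = weight_memory_gb(175e9)   # 350 GB of weights
```

Under these assumptions, the smaller model's weights could fit on a single high-memory accelerator, while the larger one would have to be split across several, which is where much of the hosting cost and engineering effort comes from.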

LLMs and generative AI in customer support: A huge leap forward, but not the last leap

The hype around generative AI is real, there’s no denying that. But at the same time, LLMs are not ready to be implemented blindly – especially when customer satisfaction is at stake.

But remember: It’s been barely 3 months since ChatGPT first came out.

The pace of advancements in the field of generative AI is nothing if not astronomical.

“Just yesterday I saw that Stanford released the fine-tuned version of the new LLaMa model [which is also an LLM], which basically now fits into the memory of a phone,” says Reetu. “That’s the exciting part. These models are gigantic, but they’re getting better – within weeks, sometimes days.”

And with all the challenges we’ve faced over the past few months, even an in-the-weeds AI researcher like Jaakko can’t help but feel optimistic about the tech behind ChatGPT:

“I feel very excited. There’s reason for optimism – but you need to have a good sense of realism mixed in there.”

And that’s about as much full-on buoyancy as you’ll get out of a Finn – so it’s gotta mean something.