When building out a new product, it can be hard to know when the time is right to stop aiming for perfection and let your creation fly. In mid-April, we took the plunge and launched UltimateGPT (you can watch the full product launch event recording here). Now that our LLM bot is out in the world, we’ve been listening to customer feedback and iterating fast.

The innovation behind ChatGPT has changed our industry forever. And as the technology continues to mature, new applications and use cases will emerge in the customer support sphere. We’re nowhere near done with our LLM journey — but it’s time to take a beat and reflect on what we’ve learned so far.

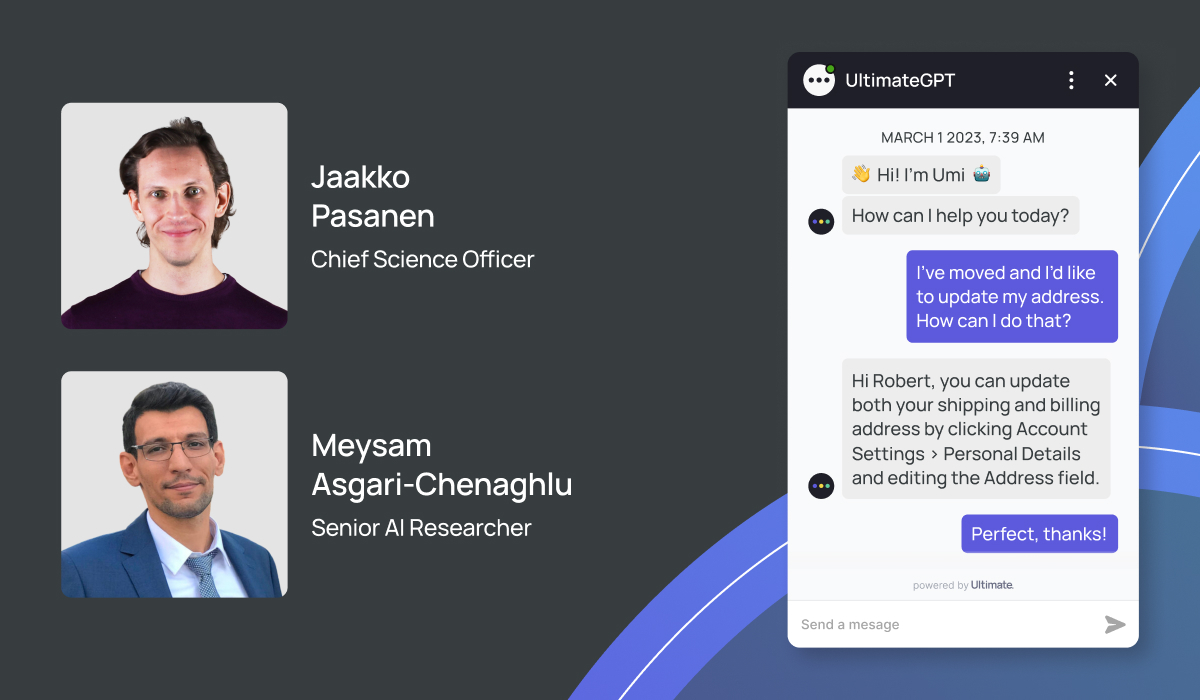

You can watch the full 30-minute recording here, or read on for a summary of key insights from this conversation between Jaakko, Meysam, and our CEO Reetu Kainulainen.

The challenges we overcame, and the ones still ahead

One of the biggest challenges is the number of moving technical parts in this product. UltimateGPT has a complex pipeline (for non-techy folks, that means there are many stages the data moves between within the AI model) as well as an internal search engine function. This complexity means there are more steps to optimize. Plus, we’re relying on external providers to host the LLM, which can lead to high latency and, in turn, slow response times for customers.

Read more on the challenges of using LLMs in customer support.

Let’s home in on these challenges, what we’ve done to address them, and our other learnings in more detail.

Perfecting UltimateGPT’s internal search engine

A lot of customers have asked us: why can’t we just connect OpenAI’s conversational bot, ChatGPT, to our tech stack directly? Well, we learned the hard way that letting an LLM loose in this way just doesn’t work. Instead, we use an approach called “open-book question answering”. This is where a search engine function is used to direct the LLM to a specific data set — your own customer service knowledge base — which serves as the factual basis for all generated answers.

“The search engine has to be near perfect. Because if the bot cannot find anything relevant to the query, then it will not be able to provide an answer.”

Meysam Asgari-Chenaghlu, Senior AI Researcher, Ultimate
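To make the open-book idea concrete, here is a minimal sketch in Python. It is not UltimateGPT’s actual pipeline: the knowledge base contents, the word-overlap scoring, the `min_score` threshold, and the function names are all assumptions chosen for illustration. It shows the two stages described above: a search step that picks passages from the knowledge base, and a prompt that restricts the LLM to those passages. When the search finds nothing relevant, no prompt is built at all, which mirrors the point in the quote.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: article id -> article text (illustrative only).
KNOWLEDGE_BASE = {
    "refunds": "Refunds are issued to the original payment method within 5 business days.",
    "shipping": "Standard shipping takes 3 to 7 business days. Express shipping takes 1 to 2 days.",
    "password": "To reset your password, open the login page and choose Forgot password.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, top_k=2, min_score=0.2):
    """Return ids of the best-matching articles, or an empty list if none score high enough."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), article_id)
              for article_id, text in KNOWLEDGE_BASE.items()]
    scored = [(score, article_id) for score, article_id in scored if score >= min_score]
    scored.sort(reverse=True)
    return [article_id for _, article_id in scored[:top_k]]


def build_prompt(query):
    """Build a grounded prompt for the LLM, or return None when nothing relevant was found."""
    article_ids = retrieve(query)
    if not article_ids:
        return None  # Nothing to ground the answer in, so hand over instead of guessing.
    context = "\n".join(f"[{a}] {KNOWLEDGE_BASE[a]}" for a in article_ids)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )
```

In this sketch, `build_prompt("How do I reset my password?")` produces a prompt grounded in the password article, while `build_prompt("pancake recipe")` returns `None`. Plain word overlap is used here only to keep the example short; it misses paraphrased questions, so a real retrieval system would need something stronger.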

This has been a top priority for our R&D team, and we’ve managed to build a robust retrieval system for gathering the correct information. But there’s still more work to be done. Customers might not phrase their questions the way the information is written in the knowledge base, which makes it difficult for the search engine to surface the right answer.

One unexpected outcome of the search engine approach is that it has allowed our test customers to gather valuable data on how useful the support articles in their knowledge base really are. This data can show which articles are surfaced most often and which sections of those articles are most useful, and it can help identify knowledge gaps.
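As a rough illustration of how this kind of insight could be pulled from search logs, here is a small Python sketch. The log format, the article ids, and the `summarize` function are hypothetical and are not taken from UltimateGPT. The sketch counts how often each article is surfaced and collects the queries for which nothing was found, which are candidates for knowledge gaps.

```python
from collections import Counter

# Hypothetical retrieval log: (customer query, ids of the articles the search surfaced).
retrieval_log = [
    ("how do I reset my password", ["password"]),
    ("where is my refund", ["refunds"]),
    ("refund for damaged item", ["refunds", "shipping"]),
    ("do you ship to Canada", []),
    ("can I pay with crypto", []),
]


def summarize(log):
    """Count how often each article was surfaced and list the queries that matched nothing."""
    surfaced = Counter(article for _, articles in log for article in articles)
    gaps = [query for query, articles in log if not articles]
    return surfaced, gaps


surfaced, gaps = summarize(retrieval_log)
print(surfaced.most_common())  # Articles ordered from most to least surfaced.
print(gaps)                    # Queries with no matching article.
```

Queries that repeatedly end up in `gaps` point to topics the knowledge base does not yet cover.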

Improving the bot’s accuracy

It doesn’t matter how delightful the conversational experience is — if your bot is serving up incorrect information to customers, they won’t be happy.

The information within a knowledge base might answer a question directly or indirectly. And in cases where there are only indirect answers, hallucinations are more likely to happen. So how did we tackle hallucinations?

First, we updated from a primitive to a more complex AI pipeline with additional steps. We also changed how we convert the information gathered from the knowledge base and index this within the search function — leading to more accurate responses.

“We spent a lot of time putting the genie back in the bottle and controlling the accuracy of the model. Putting in that control is the absolute first step you have to get right.”

Reetu Kainulainen, CEO and Co-founder, Ultimate

What else can you do to improve UltimateGPT’s accuracy? Simple: provide the bot with a higher quality data source. Here are some tips on how to edit your customer support knowledge base to get it in the best possible shape for generative AI.

Tailoring the user experience

One key learning is that different brands want to provide different user experiences. One customer wanted the model to only talk about support issues and not engage with any other topics. Another wanted the model to be able to talk about anything, allowing the bot to dish out pancake recipes as well as solve support issues — just as long as it didn’t mention their competitors.

“Drawing these sort of arbitrary boundaries in the LLM world is sometimes — or oftentimes — very difficult. You can give instructions about ‘do this, don’t do that’ and it may or may not follow your instructions.”

Jaakko Pasanen, Chief Science Officer and Co-founder, Ultimate

Soon, we’re planning to let the bot generate answers on any topic, even if the relevant information isn’t available in your knowledge base. This would enable more playful use cases for our customers. But we’re still in the exploration phase and need to do more work on deciding which questions can be answered freely and which need to be grounded in knowledge base facts.

So what’s next?

Getting the factual accuracy of the model right is the first step. And we’re feeling pretty confident in that arena. The second (and much trickier) step is making it an incredible experience for customers. We’ll continue iterating and improving, but our conversational bot isn’t the only thing we’re working on.

Here are some of the generative AI highlights in our product roadmap:

- Extending UltimateGPT to ticket automation

- Generating expressions to speed up bot building and training

- Summarizing tickets before escalating to a human agent to save time and effort

And that’s not all. Meysam predicts that in the near future we’ll see more specialized LLMs cropping up, designed to solve specific business problems and to address the issues around relying on external LLM providers.

The AI race is on. Stay tuned to see where we take UltimateGPT next.