Event

How to future-proof your support with generative AI

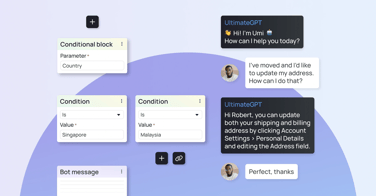

At this product launch event, you'll learn how to combine generative AI with conversation design to deliver a better customer experience (CX) at scale.